Is #PatientsUseAI safe and okay?? Our answer: Compared to what? Part 1

A two-part examination of why #PatientsUseAI

The #PatientsUseAI stories on this blog have gotten lots of attention, because some patients are doing really useful things with AI.

Some are clinically amazing, like Hugo Campos relieving his father’s horrible rash while waiting months for an appointment.

Some are truly useful, like James Cummings aggregating his son’s complex data from four separate Epic systems, which helped a clinician who can’t see that view.

Some are just simple, autonomous and patient-centered, like Burt Rosen asking ChatGPT to make sense out of his scans (or me asking it to extract action items from my visit notes).

Yet still, on social media I hear rumblings of concern. “This stuff isn’t safe!” “Patients won’t know what they’re doing!” “Don’t you know it hallucinates?”

So let’s look at it! I’ll address two factors in consecutive posts.

Today: Is it accurate? Can it be trusted? (I’ll deal with hallucinations here, too.)

Tomorrow: For patients who can’t get care, is it better than nothing? For this we’ll turn to a new Viewpoint column in the top-dog journal NEJM.

Some of this material will be familiar to AI-savvy people, but I’m including it because we get a lot of pushback from people who aren’t AI-savvy yet. Plus, for future reference, I want to get it captured in one place.

1. Is it accurate? Can it be trusted?

The short answer is yes: When it’s properly instructed, it’s better than most doctors, as measured by medical licensing exams.

Here are a few of the numerous studies that have received widespread attention, starting right after ChatGPT was launched in November 2022:

Feb 2023: ChatGPT passes the medical licensing exam.

Yes, it passed the medical licensing exam that new doctors have to pass. And this was when GPT was a baby - just after its first public version was launched.

USMLE is the US Medical Licensing Exam. Without any medical training, this early version of ChatGPT (3.5) performed “at or near the passing threshold” of all three levels of USMLE. (As you’ll see below, it’s gotten much better since.)

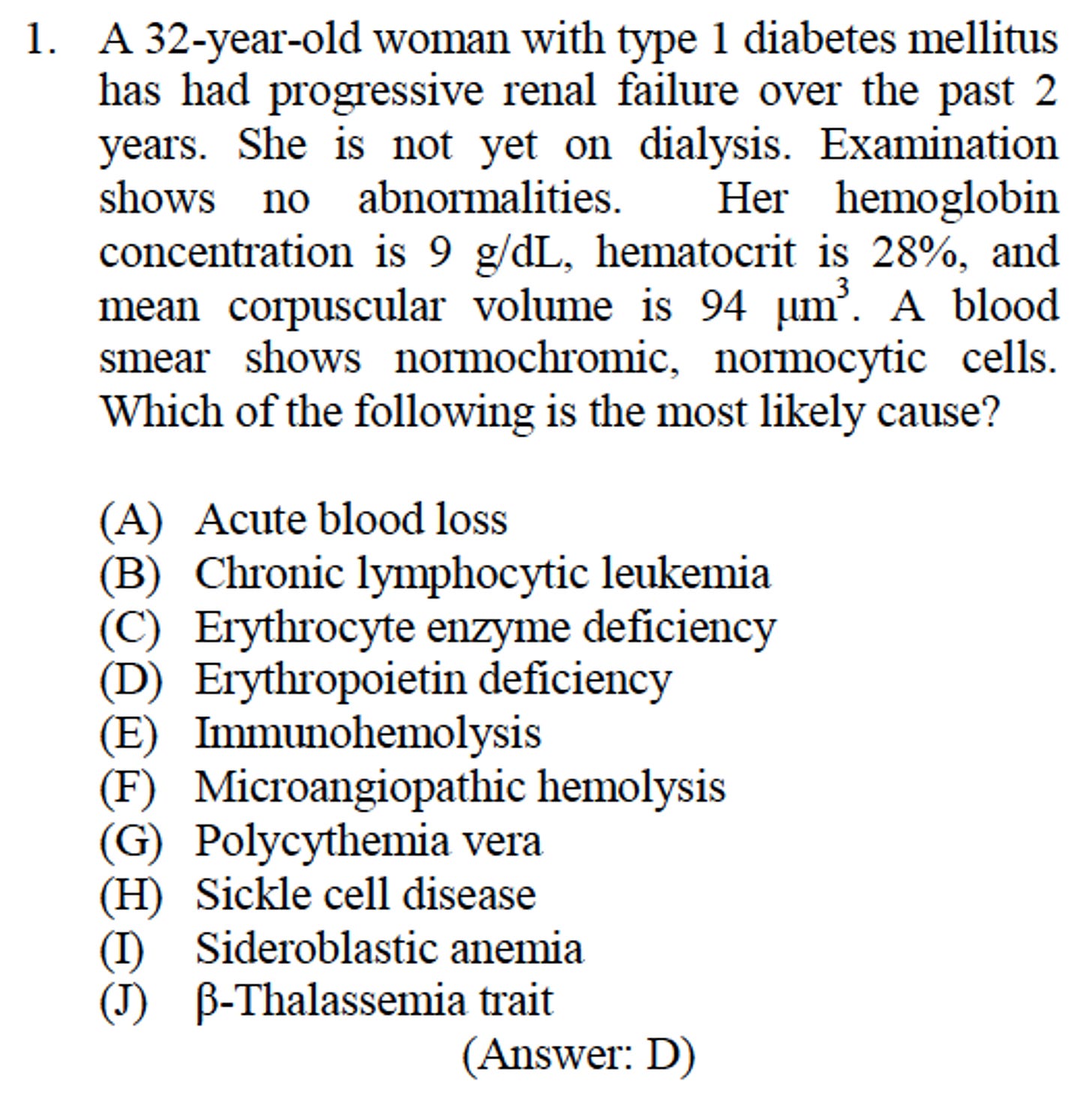

How hard is that? Hard. In 2013 I was invited to keynote the 100th annual meeting of NBME, the National Board of Medical Examiners, which creates those exams. In preparation I asked to see sample questions. Here’s one they gave me:

A few things to notice:

This stuff is hard!!!

You can’t pass with guesses. (Multiple choice with ten options??) You have to genuinely know stuff to get lots of right answers.

There’s an incredible number of things to know and detail to understand.

Make a mental note of all that detail - we’ll come back to it.

For reference, the average doctor scores 75% and a passing grade is around 60%.

Our next example is eight months later. Instead of facts, this one tested “soft skills” - bedside manner, etc.:

Oct 2023 (Nature): Comparing ChatGPT and GPT-4 performance in USMLE soft skill assessments

In this study the chatbot was asked to respond in text to various situations, and its answers were graded just like a medical student’s. A quote from the article compares GPT-4 to the performance of students who’ve used the AMBOSS test prep system:

Empathy: “The performance of the AI models was compared to that of previous AMBOSS users. GPT-4 outperformed ChatGPT, correctly answering 90% compared to ChatGPT’s 62.5%. …

“The performance of GPT-4 was higher than that of AMBOSS's past users. Both AI models, notably GPT-4, showed capacity for empathy, indicating AI's potential to meet the complex interpersonal, ethical, and professional demands intrinsic to the practice of medicine.”

90%!

Then nine months after that, the latest version, GPT-4o, did rather well:

July 2024: The newest ChatGPT scored 98%

In this case the researchers used just a small sample (50 questions) from the Level 3 exam, the last one before you can call yourself a doctor. They fed them to five different LLMs. Results:

ChatGPT: 98%!

Claude 3.5: 90%

Gemini Advanced (Google): 86%

Grok (X / Twitter’s AI): 84%

HuggingChat (Llama): 66%

Look: even the worst of them got a passing grade! And all the others were well above average. Read the article for more context - there’s much more to this than just facts:

To us, the response highlighted the model’s capacity for broader reasoning, expanding beyond the binary choices presented by the exam.

So: is AI accurate? Are patients headed for trouble when they ask AI a question?

Apparently the answer is:

AI like this is more accurate than most doctors(!), if …

If you give it enough facts.

That’s an important “if,” both for AI observers and for #PatientsUseAI users.

Scroll back to that screen capture of the test question. When you ask an AI a question, can you provide that much information? If not, just like a human expert, the AI will be less able to give its best possible answer.

“You are what you eat. So is your AI.”

I got that line from Rachel Dunscombe, CEO of openEHR.org, a health data standards group, during our prep call for my keynote last week at their annual meeting. They’re very autonomy-oriented, for clinicians as well as patients, and she agreed with what #PatientsUseAI users are experiencing. Knowledge is power - to an AI, same as with humans.

Pro tip: if you start a conversation with an AI about a medical question, you might not have all the best information at first. Go ahead and get started if you want, but:

Don’t expect the best possible answer with incomplete information.

You can come back later and continue the conversation, e.g. “I just dug out some old blood test values for comparison. Here they are. Does this change your thinking?”

Always remember, the thing can hallucinate: just like human doctors, it can make mistakes. So do not take action without professional advice or at least checking another source. (You can often catch it in an error by asking it for specific sources, and then checking those sources.) AI is not your perfect guru. Use it to help you think through things.

Which brings us back to:

Remember Hugo’s Law

Hugo Campos has been using this stuff since the very beginning, and when I first asked him about hallucinations (wrong answers), he laughed and said, “Dave, I don’t ask it to just give me an answer. I use it to help me think” about the question at hand.

Hugo’s Law:

“I don’t use it to just give me answers.

I use it to help me think.”

The other thing that’s a huge factor in favor of #PatientsUseAI is that you can get at the AI 24/7, which is certainly not true for doctor appointments.

And that leads us to tomorrow’s topic: for patients who can’t get care, or can’t get it soon, is it better than nothing? For that we’ll refer to a new essay that just came out in NEJM by Zak Kohane, editor of NEJM AI: “Compared with What? Measuring AI against the Health Care We Have.”

Correction: an earlier edition said Zak’s essay was in NEJM AI. It’s in NEJM itself, the big big-dog journal.

Of course you're at the forefront of education about this! Thank you, I'm sharing it.

Great post, as usual. I want to focus on one point, your "Pro tip: if you start a conversation with an AI about a medical question, you might not have all the best information at first. Go ahead and get started if you want, but Don’t expect the best possible answer with incomplete information."

That point isn't known or mentioned enough. GenAI doesn't "know" anything. It is a mathematical tool that uses statistics to produce content that, most of the time, makes sense and helps us think forward. As an aside, it is easy to tweak it so that it generates nonsensical content.

Because of the way GenAI works, you'll get better and better results as you improve the precision of the questions you ask (prompt engineering). So start with general questions. Analyze the results and pull out what's most important in them. Then ask a focused question based on what you've pulled out. Work iteratively in this way and you'll end up with remarkably helpful results.

And, of course, double-check the answers you get, either by using a different chat service to validate the response or by using old-style search engines to do the same. If you are looking into medical/scientific topics, go to PubMed and check what has been published about the answer you got. You'll end up much more informed than if you wait for an underpaid, overworked HCP.